Reimagine the Internet Day 2: Misinformation, Disinformation, and Media Literacy in a Less-Centralized Social Media Universe

Posted 5/11/2021

This week I’m attending the Reimagine the Internet mini-conference, a small, mostly academic discussion about decentralizing away from a corporate-controlled Internet to build a more socially positive network. This post is a collection of my notes from the second day of talks, following my previous post.

Today’s session was mostly on cultural patterns that lead to susceptibility to misinformation and conspiratorial thinking.

Scriptural Inference

I was not raised religiously, and rarely consider religion when analyzing online communities. One of today’s speakers is an anthropologist who has spent extensive time in southern conservative groups, which include a very high number of practicing Protestants. They drew very direct comparisons between Protestant behavior - namely personal reading, interpretation, and discussion of the Christian Bible - and conservative political practices, especially with regard to:

- Close readings and interpretation of original documents (the constitution, federalist papers, tax law, Trump speech transcripts) over “expert contextual analysis”
- A preference for personal research over third party expertise or authority, in almost all contexts

This aligns with constitutional literalism, a mistrust of journalists, of academics, of climate science, of mask and vaccine science, of… a lot. It’s a compelling argument. There are also very direct comparisons to conspiracy groups like QAnon, which feature slogans like “do the research.”

It’s also an uncomfortable argument, because mistrust of authority is so often a good thing. Doctors regularly misdiagnose women and people of color. There’s the Tuskegee Study. There’s an immense pattern of police violence after generations of white parents telling their children the cops are there to protect everyone. There are countless reasons to mistrust the government.

But, at the same time, expertise and experience are valuable. Doctors have spent years at medical school, lawyers at law school, scientists in academic research, and they do generally know more than you about their field. How do we reconcile a healthy mistrust of authority with an appreciation for the value of expertise?

One of the speakers recommended that experts help the public “do their own research”. For example, doctors could give their skeptical patients a list of vocabulary terms to search for material on their doctor’s diagnosis and recommended treatment, pointing them towards reputable material and away from misinformation. I love the idea of making academia and other expert fields more publicly accessible, but making all that information interpretable without years of training, and training field experts like doctors to communicate those findings to the public, is a daunting task.

Search Engine Reinforcement

For all the discussion of “doing your own research” in the previous section, the depth of research in many of these communities is shallow, limited to “I checked the first three to five results from a Google search and treated that as consensus on the topic.”

Of course, Google results are not consensus. They are shaped both by keyword choice (“illegal aliens California” and “undocumented immigrants California” return quite different results despite nominally meaning the same thing) and by past searches and clicks, which are used to determine “relevancy”. This works well for helping refine a search to topics you’re interested in, but also massively inflates confirmation bias.

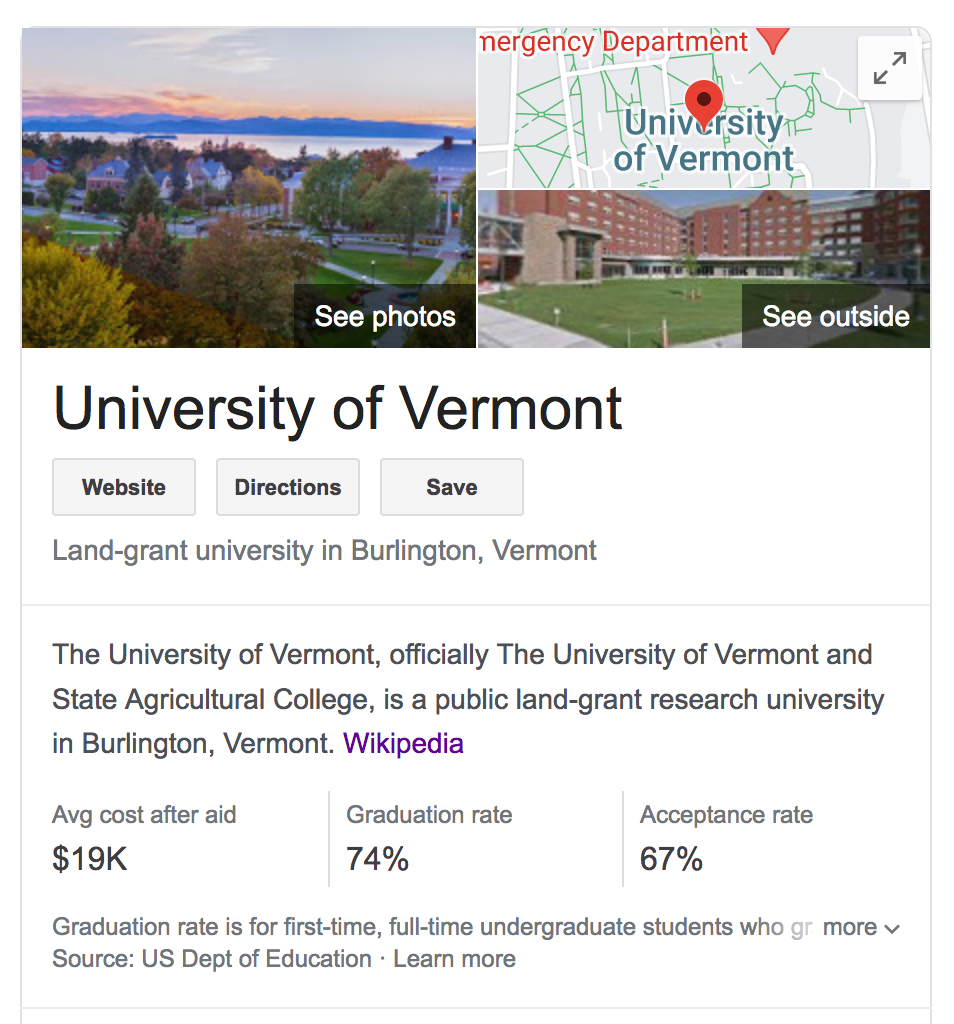

Google knowledge graphs like the one above change a Google result from “returning documents” to “returning information”, blurring the source and legitimacy of information, and further partitioning the web into distinct information spheres, where people can easily find “facts” supporting their existing positions.

Data Voids

“Data voids” are keywords with very few or no existing search results. Because search engines will always try to find the most relevant results, even if the signal is poor, it’s relatively easy to create many documents with these “void keywords” and quickly top the search results. This makes it easy to create an artificial consensus on an obscure topic, then direct people to those keywords to “learn more”. A pretty simple propaganda technique utilizing a weakness of search engines.
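To make the mechanism concrete, here is a toy sketch of a data void being filled. Everything in it is an assumption for illustration: the keyword “zorbex” is made up, the corpus is invented, and ranking by raw keyword count is a stand-in that is far cruder than anything a real search engine does. The point is only that when almost nothing matches a keyword, a handful of planted documents is enough to own the results.

```python
from collections import Counter

def score(query, document):
    """Count how many times the query's words appear in the document."""
    words = Counter(document.lower().split())
    return sum(words[term] for term in query.lower().split())

def search(query, corpus, top_n=3):
    """Return titles of the top_n highest-scoring documents that match at all."""
    scored = [(score(query, text), title) for title, text in corpus.items()]
    matches = [(s, title) for s, title in scored if s > 0]
    # Stable sort: documents with equal scores keep their insertion order.
    matches.sort(key=lambda pair: pair[0], reverse=True)
    return [title for _, title in matches[:top_n]]

# A hypothetical corpus. Only one document mentions the void keyword.
corpus = {
    "encyclopedia": "vaccines are tested in clinical trials before approval",
    "news-report": "health agency publishes vaccine trial results",
    "forum-post": "someone mentioned zorbex once in passing",
}

before = search("zorbex", corpus)

# Someone publishes a few pages stuffed with the void keyword.
for i in range(3):
    corpus[f"planted-{i}"] = "zorbex zorbex the truth about zorbex they hide"

after = search("zorbex", corpus)

print(before)  # ['forum-post']
print(after)   # ['planted-0', 'planted-1', 'planted-2']
```

Three planted pages are enough to push the only organic mention out of the top three, so anyone told to “search for zorbex and see for yourself” now finds what looks like agreement.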

Generational Differences

The speakers ended with a discussion on generational differences, especially in how media literacy is taught. Older generations had “trusted sources” that they went to for all news. Students were often told to “seek .org and .edu sites for trustworthy citations”, or before then were told that nothing on the web could be trusted, and print media and academic journals were the only reliable sources. Obviously these are all outdated notions: anyone can register a .org domain, there’s plenty of misinformation in print media, and traditionally “trustworthy” sources often fall prey to stories laundered through intermediary publications until they appear legitimate. The “post-social-media youth” see all news sources as untrustworthy, emphasizing a “do your own research” mentality.

Conclusion

I really like this framing of “institutional trust” versus “personal experience and research”. It adds more nuance to misinformation than “online echo-chambers foster disinfo”, or “some communities of people are uneducated”, or “some people are racist and selectively consume media to confirm their biases.” Some people are not confident in their beliefs until they have done research for themselves. We’ve created a search engine and echo-chamber system that makes it very easy to find reinforcing material and mistake it for consensus, and there are people maliciously taking advantage of this system to promote misinformation for their political gain. Click here for my notes from day 3.