The Efficacy of Subreddit Bans

Posted 7/1/2021

Deplatforming is a moderation technique where high-profile users or communities are banned from a platform in an effort to inhibit their behavior. It’s somewhat controversial, because while the intention is usually good (stopping the spread of white supremacy, incitement to violence, etc.), the impacts aren’t well understood: do banned users or groups return under alternate names? Do they move to a new website and regroup? When they’re pushed to more obscure platforms, are they exchanging a larger audience for an echo chamber where they can more effectively radicalize the people who followed them? Further, this post only discusses the impact of deplatforming, not whether private social media companies should have the responsibility of governing our shared social sphere in the first place.

We have partial answers to some of the above questions, like this recent study that shows that deplatformed YouTube channels that moved to alt-tech alternatives lost significant viewership. Anecdotally we know that deplatformed users sometimes try to return under new accounts, but if they regather enough of their audience then they’ll also gather enough attention from the platform to be banned again. Many finer details remain fuzzy: when a community is banned but the users in that community remain on the platform, how does their behavior change? Do some communities respond differently than others? Do some types of users within a community respond differently? If community-level bans are only sometimes effective at changing user behavior, then under what conditions are they most and least effective?

I’ve been working on a specific instance of this question with my fabulous co-authors Sam Rosenblatt, Guillermo de Anda Jáuregui, Emily Moog, Briane Paul V. Samson, Laurent Hébert-Dufresne, and Allison M. Roth. The formal academic version of our paper can be found here (it’s been accepted, but not yet formally published, so the link is to a pre-release version of the paper). This post is an informal discussion about our research containing my own views and anecdotes.

We’ve been examining the fallout from Reddit’s decision to change their content policies and ban 2000 subreddits last year for harmful speech and harassment. Historically, Reddit has strongly favored community self-governance. Each subreddit is administered by moderators: volunteer users that establish their own rules and culture within a subreddit, and ban users from the subreddit as they see fit. Moderators, in turn, rely on the users in their community to report rule-violating content and apply downvotes to bury offensive or off-topic comments and posts. Reddit rarely intervened and banned entire communities before this change in content policy.

Importantly, Reddit left all users on the platform while banning each subreddit. This makes sense from a policy perspective: how does Reddit distinguish between someone who posted a few times in a white supremacist subreddit to call everyone a racist, and someone who’s an enthusiastic participant in those spaces? It also provides us with an opportunity to watch how a large number of users responded to the removal of their communities.

The Plan

We selected the 15 banned subreddits with the most users per day that were open for public participation at the time of the ban. (Reddit has invite-only “private subreddits”, but we can’t collect data from these, and even if we could, the invite-only aspect makes them hard to compare to their public counterparts.) We gathered all comments from these subreddits in the half-year before they were banned, then used those comments to identify the most active commenters during that time, as well as a random sample of participants for comparison. For each user, we downloaded all their comments from every public subreddit for two months before and after the subreddit was banned. This is our window into their before-and-after behavior.
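To make the sampling step concrete, here is a minimal sketch, assuming comments are already available as simple records. The `Comment` layout, the helper names `select_users` and `split_window`, the choice to draw the random sample only from non-top participants, and the use of 60 days for "two months" are all illustrative assumptions, not the paper's actual code.

```python
import random
from collections import Counter
from datetime import datetime, timedelta
from typing import NamedTuple


class Comment(NamedTuple):
    """Hypothetical record layout for one Reddit comment."""
    author: str
    subreddit: str
    created: datetime
    body: str


def select_users(sub_comments, n_top, n_random, seed=0):
    """Return the n_top most active commenters and a random sample of the other participants.

    sub_comments: comments from the banned subreddit in the half-year before the ban.
    """
    counts = Counter(c.author for c in sub_comments)
    top = [author for author, _ in counts.most_common(n_top)]
    others = sorted(set(counts) - set(top))  # sorted so the seeded sample is reproducible
    sample = random.Random(seed).sample(others, min(n_random, len(others)))
    return top, sample


def split_window(user_comments, ban_date, days=60):
    """Split one user's comments (from every public subreddit) into before/after windows."""
    start, end = ban_date - timedelta(days=days), ban_date + timedelta(days=days)
    before = [c for c in user_comments if start <= c.created < ban_date]
    after = [c for c in user_comments if ban_date <= c.created < end]
    return before, after
```

Comments older than 60 days before the ban, or 60 or more days after it, fall outside both windows and are ignored.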

Next, we identified vocabulary words that make up an in-group vocabulary for each banned subreddit; details on that are in the next section. Finally, we calculated how much more or less a user comments after the subreddit has been banned, and whether their comments contain a greater or smaller percentage of words from the banned subreddit’s vernacular. By looking at this change in behavior across many users, we can start to draw generalizations about how the population of the subreddit responded. By comparing the responses to different subreddit bans, we can see some broader patterns in how subreddit bans work.

In-Group Language

We want some metric for measuring whether users from a subreddit talk about the same things now that the subreddit has been banned. Some similar studies measure things like “did the volume of hate speech go down after banning a subreddit”, using a pre-defined list of “hate words”. We want something more generalizable. As an example, did QAnon users continue using phrases specific to their conspiracy theory (like WWG1WGA - their abbreviated slogan “Where we go one, we go all”) after the QAnon subreddits were banned? Ideally, we’d like to detect these in-group terms automatically, so that we can run this analysis on large amounts of text quickly without an expert reading posts by hand to highlight frequent terms.

Here’s roughly the process we used:

1. Take the comments from the banned subreddit
2. Gather a random sample of comments from across all of Reddit during the same time frame (we used 70 million comments for our random sample)
3. Compare the two sets to find words that appear disproportionately on the banned subreddit

In theory, comparing against “Reddit as a whole during the same time period” rather than, for example, “all public domain English-language books” should not only find disproportionately frequent terms, but should filter out “Reddit-specific words” (subreddit, upvote, downvote, etc), and words related to current events unless those events are a major focus point for the banned subreddit.

There’s a lot of hand-waving between steps 2 and 3: before comparing the two sets of comments we need to apply a ton of filtering to remove punctuation, make all words singular lower-case, “stem” words (so “faster” becomes “fast”), etc. This combines variants of a word into one ‘token’ to more accurately count how many times it appears. We also filtered out comments by bots, and the top 10,000 English words, so common words can never count as in-group language even if they appear very frequently in a subreddit.
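A rough sketch of that cleanup, using only the Python standard library. `COMMON_WORDS` and `BOT_ACCOUNTS` are tiny hypothetical placeholders for the real 10,000-word list and bot list, and `crude_stem` is a deliberately simplistic suffix stripper standing in for a real stemmer such as Porter's; it only approximates "singular" forms by dropping a trailing "s".

```python
import re
from collections import Counter

# Hypothetical stand-ins: the real pipeline filtered the top 10,000 English words
# and comments by bots; these tiny sets are placeholders for illustration.
COMMON_WORDS = {"the", "a", "and", "is", "to", "of", "it", "this", "that"}
BOT_ACCOUNTS = {"AutoModerator"}

TOKEN_RE = re.compile(r"[a-z0-9]+")


def crude_stem(word):
    """Rough suffix stripping; a stand-in for a real stemmer such as Porter's."""
    for suffix in ("ing", "ed", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def tokenize(body):
    """Lower-case, drop punctuation, remove common words, then stem what remains."""
    text = body.lower().replace("'", "").replace("\u2019", "")
    words = TOKEN_RE.findall(text)
    # Filter common words before stemming, so e.g. "this" is not turned into "thi".
    return [crude_stem(w) for w in words if w not in COMMON_WORDS]


def word_counts(comments):
    """Count stemmed tokens over (author, body) pairs, skipping known bot accounts."""
    counts = Counter()
    for author, body in comments:
        if author not in BOT_ACCOUNTS:
            counts.update(tokenize(body))
    return counts
```

For example, `tokenize("The patriarchy, and the radfems!")` yields `["patriarchy", "radfem"]`: punctuation and common words are gone, and the plural collapses into the same token as the singular.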

Step 3 is also more complicated than it appears: you can’t compare word frequencies directly, because words that appear once in the banned subreddit and never in the random Reddit sample would technically occur “infinitely more frequently” in the banned subreddit. We settled on a method called Jensen-Shannon Divergence, which basically compares the word frequencies from the banned subreddit text against an average of the banned subreddit’s frequencies and the random Reddit comments’ frequencies. The result is what we want: words that appear much more in the banned subreddit than on Reddit as a whole get a high score, while words that appear at similar rates in both samples, or only rarely in the banned subreddit, get a low score.
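One way to turn Jensen-Shannon Divergence into a per-word score is to look at each word's contribution to the divergence. With p the word's relative frequency in the banned subreddit, q its relative frequency in the random sample, and m = (p + q) / 2 the average, a word contributes ½·p·log(p/m) + ½·q·log(q/m). The sketch below assumes that per-word decomposition, keeps only words with p > q, and uses base-2 logs; the paper's exact scoring and normalization may differ, and `jsd_word_scores` / `top_vocab` are hypothetical names.

```python
import math
from collections import Counter


def jsd_word_scores(target, background):
    """Per-word contributions to the Jensen-Shannon divergence of two word distributions.

    target, background: Counters of token counts (e.g. the banned subreddit and the
    random Reddit sample). Only words relatively more frequent in target are scored.
    """
    t_total = sum(target.values())
    b_total = sum(background.values())
    scores = {}
    for word in set(target) | set(background):
        p = target[word] / t_total  # Counter returns 0 for missing words
        q = background[word] / b_total
        if p <= q:
            continue  # keep only words over-represented in the target community
        m = (p + q) / 2  # the "average" distribution
        score = 0.5 * p * math.log2(p / m)
        if q > 0:  # 0 * log(0) is taken as 0
            score += 0.5 * q * math.log2(q / m)
        scores[word] = score
    return scores


def top_vocab(target, background, k=100):
    """The k highest-scoring words: the community's linguistic fingerprint."""
    scores = jsd_word_scores(target, background)
    return sorted(scores, key=scores.get, reverse=True)[:k]
```

This handles the "infinitely more frequent" problem: a word seen once in the banned subreddit and never elsewhere has q = 0, so its score is just ½·p·log2(2) = p/2, which stays tiny because p is tiny. A word used at the same rate in both samples has p = q = m and scores exactly zero.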

This method identifies “focus words” for a community: maybe not uniquely identifying words, but things they talk about frequently. As an example, here were some of the top vocab words from r/incels before its ban:

- femoids
- subhuman
- blackpill
- cucks
- degenerate
- roastie
- stacy
- cucked

Lovely. We’ll take the top 100 words from each banned subreddit using this approach and use that as a linguistic fingerprint. If you use lots of these words frequently, you’ve probably spent a lot of time in incel forums.
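Given such a fingerprint, a user's reliance on in-group language can be summarized as the share of their words that fall in it. This is a minimal sketch, assuming "usage" means the fraction of a user's preprocessed tokens that appear in the top-100 list; `vocab_rate` is a hypothetical helper, not taken from the paper.

```python
def vocab_rate(tokens, vocab):
    """Fraction of a user's tokens that belong to the community's fingerprint vocabulary."""
    if not tokens:
        return 0.0  # assumption: a user with no words has no in-group usage
    vocab = set(vocab)
    return sum(1 for t in tokens if t in vocab) / len(tokens)
```

Computing this separately over a user's before-ban and after-ban comments gives the two numbers whose change is tracked in the results below.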

Results within a Subreddit

If a ban is effective, we expect to see users either become less active on Reddit overall, or that they’ve remained active but don’t speak the same way anymore. If we don’t see a significant change in the users’ activity or language, it suggests the ban didn’t impact them much. If users become more active or use lots more in-group language, it suggests the ban may have even backfired, radicalizing users and pushing them to become more engaged or work hard to rebuild their communities.

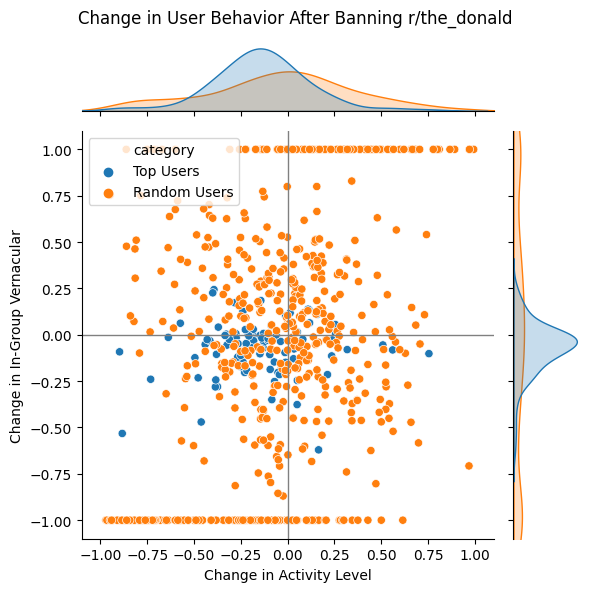

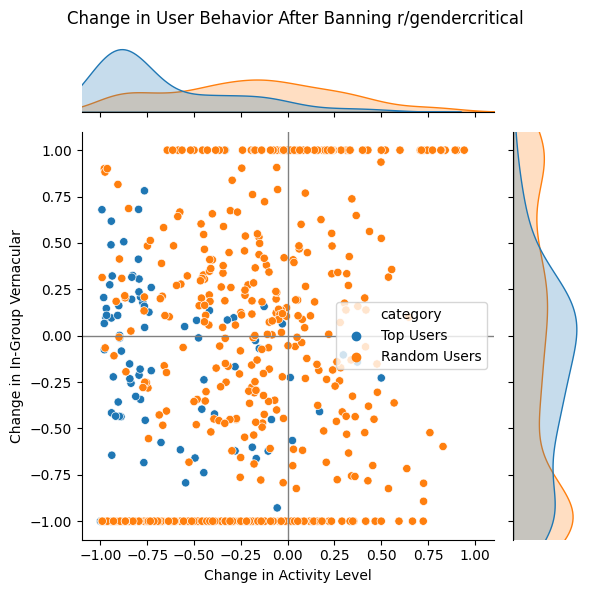

These scatterplots show user reactions on a scale from -1 to +1, with each point representing a single user. For activity, -1 means a user made 100% of their comments before the subreddit was banned, +1 means they made 100% of their comments after the ban, and 0 means they made as many comments before as after. Change in in-group vernacular usage is scaled the same way, from -1 (“only used vocab words before the ban”) to +1 (“only used vocab words after the ban”), with 0 again indicating no change in behavior. Since many points overlap, distribution plots on the edges of each graph show overall trends.
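A normalized difference, (after − before) / (after + before), has exactly the endpoints described above. The sketch assumes that formula, applied to comment counts for activity and to before/after vocabulary rates for vernacular; this matches the stated scale but is not necessarily the paper's exact definition, and `change_score` is a hypothetical name. A group-level summary can then be taken as the median score.

```python
from statistics import median


def change_score(before, after):
    """Map a before/after pair onto [-1, 1]: -1 = all before, +1 = all after, 0 = no change."""
    total = before + after
    if total == 0:
        return 0.0  # assumption: nothing in either window counts as no change
    return (after - before) / total


# Usage: activity from comment counts; a population summary via the median.
scores = [change_score(10, 0), change_score(5, 5), change_score(0, 4)]
print(median(scores))  # 0.0
```

A user who made 30 comments before and 10 after scores (10 − 30) / 40 = −0.5.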

The Donald

For those unfamiliar, r/the_donald was a subreddit dedicated to Donald Trump and MAGA politics, with no official connection to Trump or his team. Many vocab words were related to Republican politics (“mueller”, “illegals”, “comey”, “collusion”, “nra”, “globalist”), with a bent towards ‘edgy online communities’ (“kek”, “cucks”, etc.).

Top users from r/the_donald showed almost zero change in in-group vocabulary usage, and only a slight decrease in activity. By contrast, randomly sampled users from r/the_donald were all over the place: many didn’t use much in-group vocabulary to begin with, so any increase or decrease in word usage swings their vocabulary score wildly. Random users’ activity change follows a smooth normal distribution, indicating that the ban had no effect on how much they commented. For both top posters and random users, the ban seems to be ineffectual, but this contrasts with our next subreddit…

Gendercritical

r/gendercritical was a TERF subreddit: ostensibly feminist and a discussion space for women’s issues, but viciously anti-trans. Vocabulary includes feminist topic words (“misogyny”, “patriarchy”, “radfem”, “womanhood”), plus “transwomen”, “intersex”, a number of gendercritical-specific trans slurs, and, as a notable mention, “rowling”.

Here we see markedly different results. A large number of the top r/gendercritical users dramatically dropped in activity after the subreddit ban, or left the platform altogether. Some of those who remained stopped using gendercritical vocab words, while others ramped up their vocabulary usage. Random users once again show a roughly normal distribution of activity change, indicating no impact on activity, but a marked number of them stopped using gendercritical vocabulary entirely.

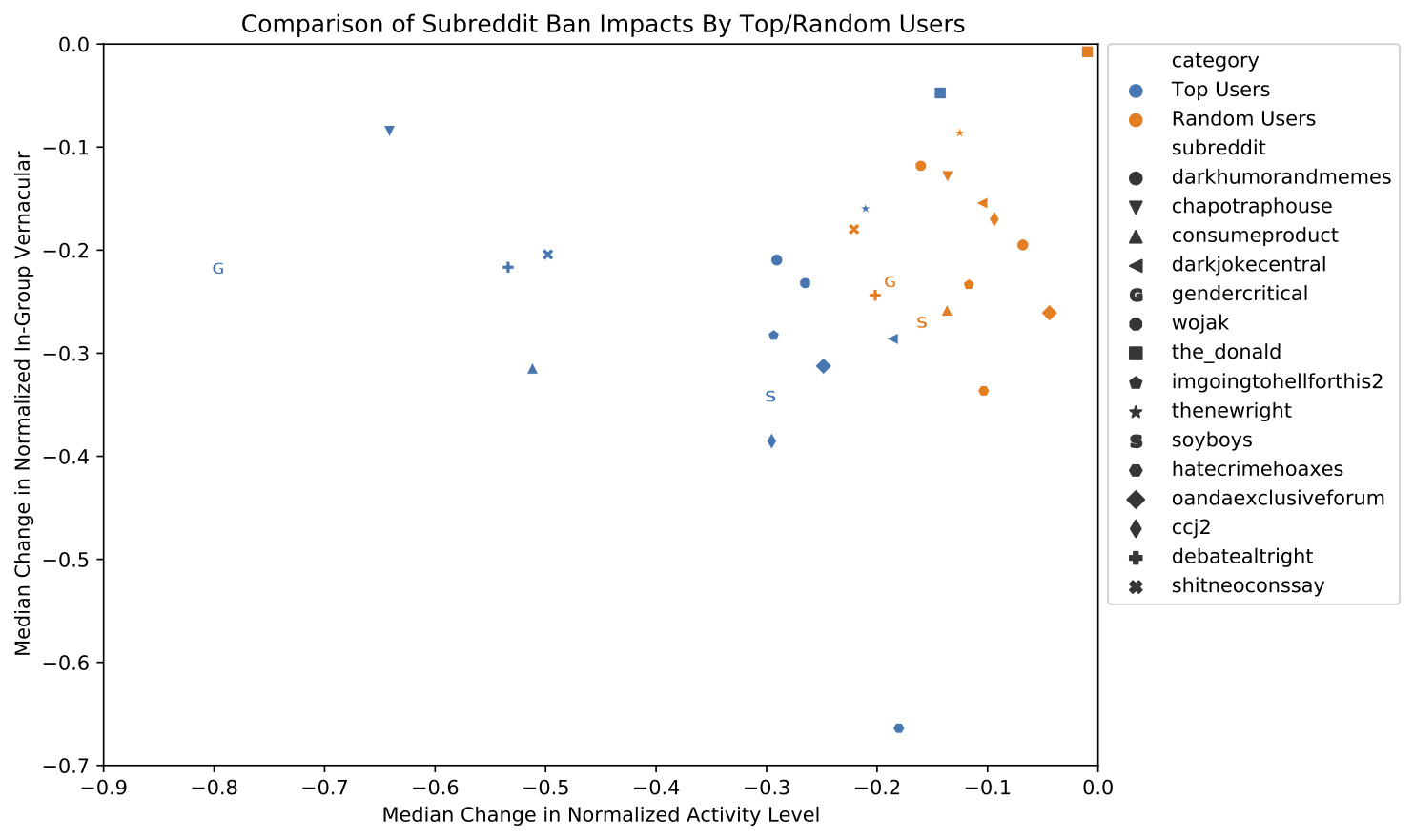

Subreddit Comparison

Rather than share all 15 subreddit plots in detail (they’re in the supplemental section of the research paper if you really want to look!), here’s a summary plot, showing the median change in vocabulary and activity for top/random users from each subreddit.

This plot indicates three things:

- Top users always drop in activity more than random users (as a population; individual top users may not react this way)
- While vocabulary usage decreases across both populations, top users do not consistently drop vocabulary more than random users
- Some subreddits respond very differently to bans than others

That third point leads us to more questions: Why do some subreddits respond so differently to the same banning process than others? Is there something about the content of the subreddits? The culture?

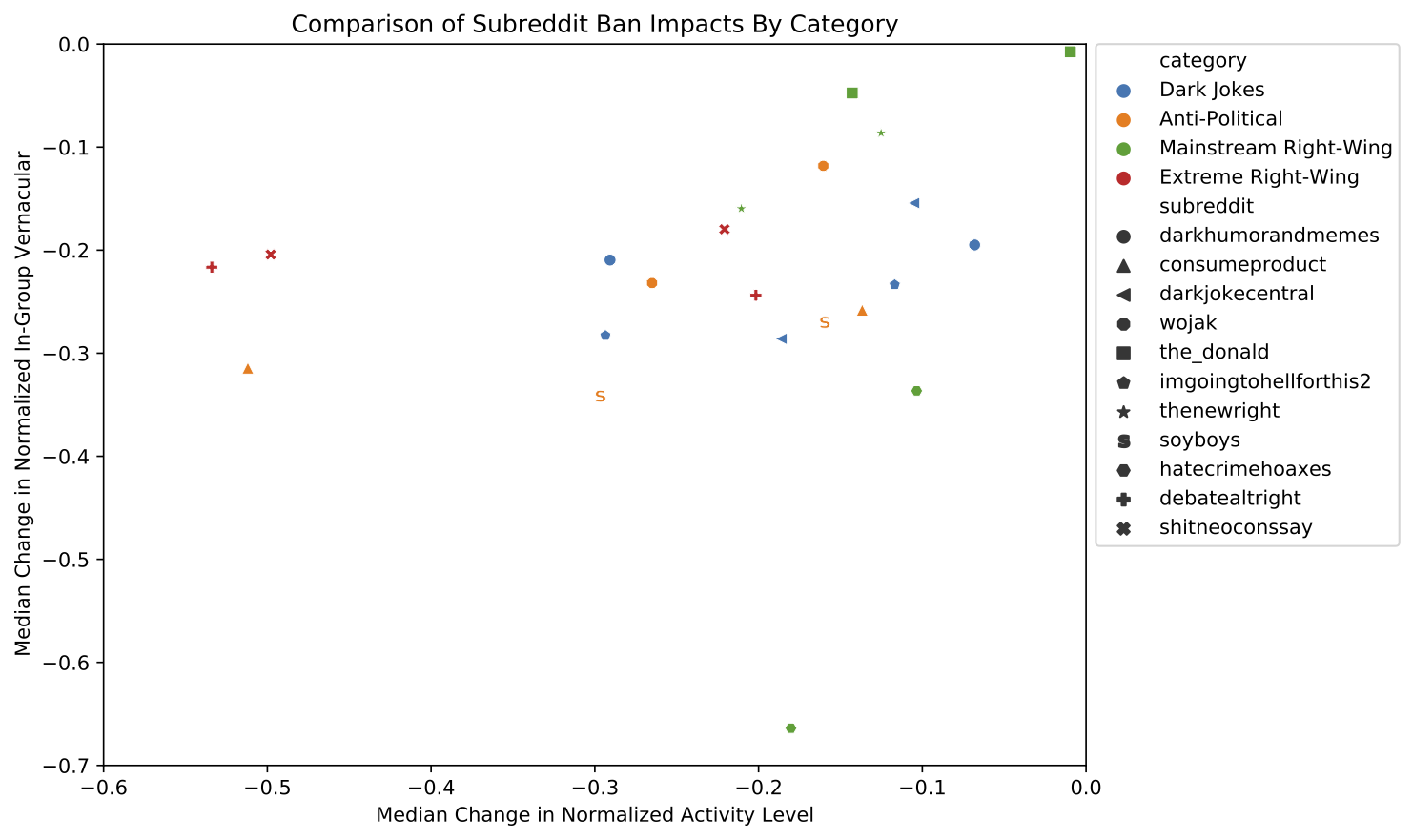

Subreddit Categorization

In an effort to answer the above questions, we categorized subreddits based on their vocabulary and content. We drew up the following groups:

| Category | Subreddits | Description |
|---|---|---|
| Dark Jokes | darkjokecentral, darkhumorandmemes, imgoingtohellforthis2 | Edgy humor, often containing racist or other bigoted language |
| Anti Political | consumeproduct, soyboys, wojak | Believe that most activism and progressive views are performative and should be ridiculed |
| Mainstream Right Wing | the_donald, thenewright, hatecrimehoaxes | Explicitly right-wing, but clearly delineated from the next category |
| Extreme Right Wing | debatealtright, shitneoconssay | Self-identify as right-wing political extremists, openly advocate for white supremacy |
| Uncategorized | ccj2, chapotraphouse, gendercritical, oandaexclusiveforum | |

(Note that the uncategorized subreddits aren’t necessarily hard to describe, but we don’t have enough similar subreddits to make any kind of generalization.)

Let’s draw the same ban-response plot again, but colored by subreddit category:

Obviously our sample size is tiny - some categories only have two subreddits in them! - so results from this plot are far from conclusive. Fortunately, all we’re trying to say here is “subreddit responses to bans aren’t entirely random, we see some evidence of a pattern where subreddits with similar content respond kinda similarly, someone should look into this further.” So what do we get out of this?

| Category | Activity Change | Vocabulary Change |
|---|---|---|
| Dark Jokes | Minimal | Minimal |
| Anti Political | Top users decrease | Top users decrease |
| Mainstream Right Wing | Minimal | Inconsistent |
| Extreme Right Wing | All decrease significantly, especially top users | Minimal |

The clearest pattern, for both top and random users, is that the “casual racism” in dark joke subreddits is the least affected by a subreddit ban, while right-wing political extremists are the most affected.

What Have We Learned?

We’ve added a little nuance to our starting question of “do subreddit bans work?” Subreddit bans make the most active users less active, and in some circumstances lead users to largely abandon the vocabulary of the banned group. Subreddit response is not uniform, and from early evidence loosely correlates with how “extreme” the subreddit content is. This could be valuable when establishing moderation policy, but it’s important to note that this research only covers the immediate fallout after a subreddit ban: How individual user behavior changes in the 60 days post-ban. Most notably, it doesn’t cover network effects within Reddit (what subreddits do users move to?) or cross-platform trends (do users from some banned subreddits migrate to alt-tech platforms, but not others?).